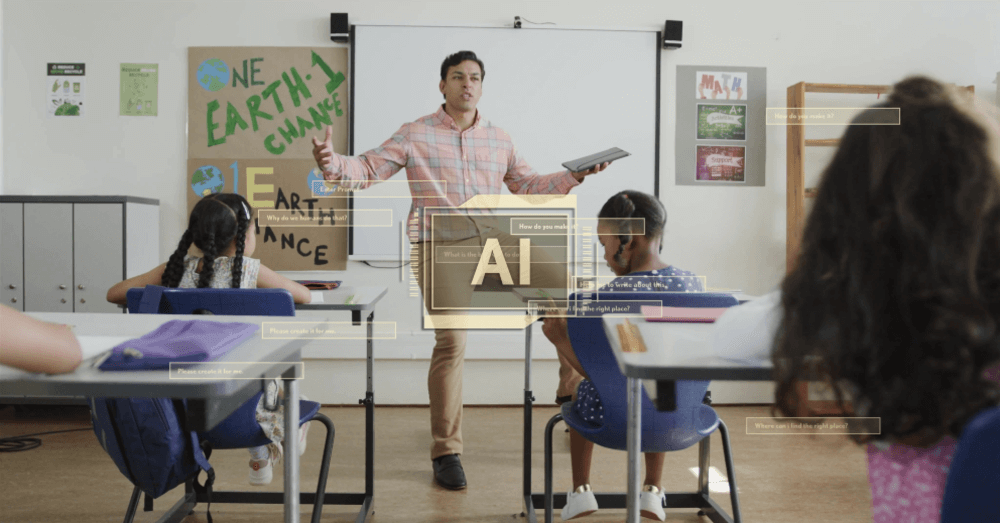

The conversation around tech education has shifted. A few years ago, teaching kids to code felt like the finish line. Now schools across the country are realizing that coding alone won’t cut it. Students need to understand artificial intelligence, and they need to understand it soon.

- Thirty-three states now have official guidance on AI use in schools, with most released within the past year.

- Teachers face the dual challenge of learning AI themselves while figuring out how to teach it responsibly.

- Organizations like Indiana’s Nextech are stepping up to train educators and create accessible AI curricula for K-12 classrooms.

Why the Rush?

Walk into any middle school computer lab today and you’ll notice something interesting. The kids aren’t confused by ChatGPT. They’re already using it for homework, creative projects, and sometimes to cheat on essays. Meanwhile, many of their teachers are still trying to figure out what a large language model actually does.

This gap matters. According to the AI4K12 Initiative, students need to understand five big ideas about artificial intelligence before they graduate. These include how computers perceive the world, how machines learn from data, and how AI systems affect society. That’s a lot more nuanced than teaching someone to write a for-loop.

The U.S. Department of Education issued new guidance this year encouraging schools to weave AI literacy into existing subjects. Sounds straightforward until you realize most teachers never took a single AI course during their training. You can’t teach what you don’t know.

What Responsible AI Education Actually Looks Like

Throwing a ChatGPT subscription at students and calling it AI education misses the point entirely. Real AI literacy means teaching kids to question what they’re seeing. Where did the training data come from? Who might be left out? When should you trust the output, and when should you be skeptical?

Researchers at the University of Hong Kong put it well. They found that many existing AI curricula focused too heavily on technical skills while ignoring the human questions. Teaching a 12-year-old to prompt an image generator is easy. Teaching that same kid to recognize when AI-generated content might spread misinformation or perpetuate bias requires a different kind of thinking altogether.

Bryan Twarek from the Computer Science Teachers Association argues that AI literacy should include a technological citizenship component. Students need to see themselves as people who can shape how AI develops, as active participants rather than passive consumers of whatever Silicon Valley builds next.

Where Teachers Can Find Help

State-level initiatives are popping up everywhere, but some are further along than others. Indiana’s Nextech is one worth watching. Based in Indianapolis, the organization provides free professional development for K-12 educators throughout the state. Their CSPDWeek brings together hundreds of teachers each summer for hands-on training in everything from kindergarten coding activities to high school AI curriculum.

What makes Nextech different from a generic workshop? They partner with Code.org and other nationally recognized curriculum providers, but they localize everything for Indiana classrooms. Teachers walk away with actual lesson plans they can use the following week, along with a support network of fellow educators who are figuring this out together.

The American Federation of Teachers recently announced a similar effort called the National Academy for AI Instruction. Connecticut launched a pilot program this spring putting AI tools directly in the hands of seventh through twelfth graders across seven districts.

The Stakes Are Higher Than You Think

MIT professor Justin Reich puts it bluntly: writing guidance on AI in schools today is like writing a guide to aviation in 1905. Nobody knows exactly where this technology is heading, but we can’t afford to wait until we figure it out.

Schools have gotten educational technology wrong before. Remember when everyone insisted students shouldn’t trust Wikipedia? That advice aged poorly.

The pressure comes from all sides. Parents want their kids prepared for jobs that will require AI fluency. Colleges want incoming students who can question and evaluate technology. Teachers want training that doesn’t feel like another mandate dumped on their already overflowing plates.

Should Schools Move Fast or Get It Right?

There’s real tension here. Moving too slowly means graduating students unprepared for a world where AI touches nearly every profession. Moving too quickly risks teaching bad habits or widening existing gaps between schools with resources and those without.

The answer probably lies somewhere in the middle. Schools need to get started, even without everything figured out. Teachers need ongoing support and room to experiment. Students need adults who are honest about the fact that nobody has all the answers yet.

The computer science race has become an AI literacy race. Schools that treat this as a sprint will probably stumble. Those that pace themselves, invest in their teachers, and stay focused on building thoughtful citizens will cross the finish line with students who can handle whatever comes next.